AIT Protocol - A Revolutionary Approach to Labeling and Processing Big Data

Hey, friends!

We are still in a bull market even though we have “cooled off” a little bit.

In earlier cycles we have sold off a little bit before the halving. Will this time be different?

Anyway, today’s newsletter is a sponsored deep dive into AIT Protocol, which falls under the AI narrative (a sector I think is massively undervalued, and I am pretty sure that most coins will be 10x at the end of this cycle from today’s prices).

All right. Let’s jump right to it!

AIT Protocol - A Revolutionary Approach to Labeling and Processing Big Data

AIT Protocol is a Web3/AI-native company with over 100,000 wallets connected to its dapp.

Let’s start with a TLDR about AIT.

AIT Protocol’s goal is to enable the seamless integration of artificial intelligence into various industries by delivering high-quality data annotation and AI model training services that empower businesses and researchers to harness the full potential of AI.

AIT Protocol will do this by providing the tools and knowledge needed to make AI accessible to all.

Introduction

The AIT Protocol leverages the power of blockchain technology to create a decentralized labor market that transcends international boundaries. The AIT decentralized marketplace lets users take part in "Train-to-Earn" tasks, a concept that lets them earn rewards while actively contributing to the progression of AI models and the development of cutting-edge solutions.

This vision is fueled by the ever-growing demand for top-tier structured data within the sphere of AI application development. For millions of data labelers, AIT serves as their inaugural entry point into the exciting realm of web3 technology, marking a significant step forward in embracing this transformative era of digital innovation and opportunity.

This wouldn’t be possible without some key team members:

- CEO | Shin Do: Co-Founder at Megala Ventures; Co-Founder at Heros & Empires, a GameFi title with 2M downloads on iOS; strong connections with web3 founders and the ecosystem.

- CTO | Tony Le: Co-Founder of PharmApp Tech Inc.; has designed solutions with more than 60 data-driven strategies; Google Cloud Professional Machine Learning Engineer; Google Cloud Professional Data Engineer; SnowPro Certified; alumnus of MIT's Data Science & Machine Learning program.

Okay, anon. I know you think stuff like this can get advanced.

So let’s explain AIT in an ELI5-way.

AI cannot function without data. You’ve probably seen that web2 companies such as Microsoft, IBM, and Amazon are crazy about it.

The reason? Well, when developing and launching their products, small AI start-ups face formidable obstacles. The struggle to manage massive data sets is real. It is costly, time-consuming, and requires highly skilled personnel, including highly compensated data scientists.

This is where AIT Protocol comes in.

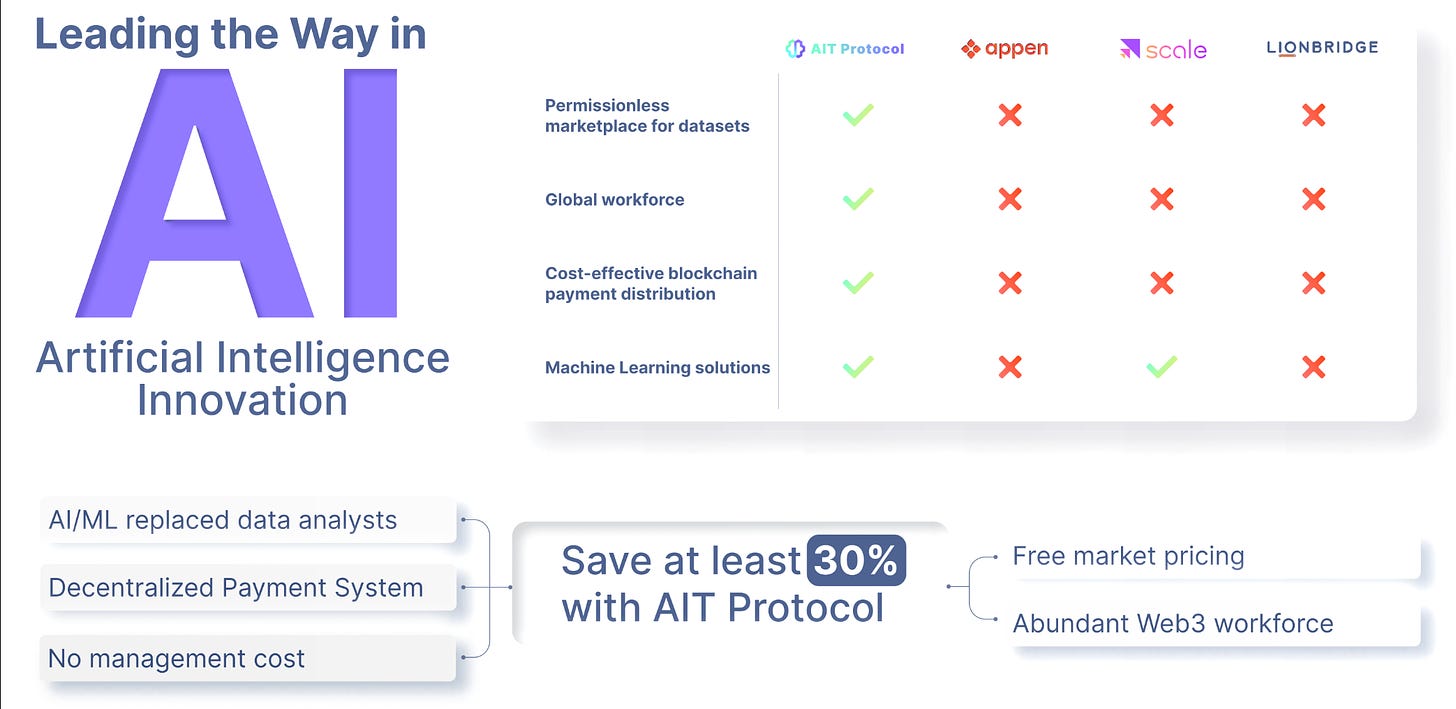

The AIT Protocol is a revolutionary approach to processing and analyzing big data, aiming to address the challenges small AI start-ups face. The protocol combines the power of machine learning with the expertise of real humans to create a more efficient and cost-effective solution.

By leveraging the abundant resources of the blockchain community and the innovative “Train-To-Earn” model, the AIT Protocol taps into a large pool of users who are willing to provide their expertise to the project. This enables the protocol to keep costs low while still delivering high-quality results.

The AIT Protocol eliminates the need for expensive intermediaries by connecting AI technology initiatives directly with users who classify and analyze massive amounts of data. This saves time and money while ensuring that only qualified individuals process the data.

The AIT Protocol is also intended to be self-improving. As machine learning algorithms become more intelligent, they can take on more and more of the work, eventually minimizing the human workload.

Consequently, the AIT Protocol is not only a cost-effective solution, but also a highly scalable one.

AIT Protocol’s 6 focus areas

Excellence in Data Annotation Services: AIT Protocol prides itself on delivering precise and comprehensive data annotation services. High-quality training data is the bedrock of successful AI models. The team is dedicated to labeling, tagging, and annotating data with meticulous attention to detail, ensuring that AI algorithms are trained on the most accurate and reliable information available.

Tailored AI Model Training: One-size-fits-all solutions do not work in the world of AI. AIT Protocol focuses on customizing AI model training to suit the unique needs of each client. Whether it's natural language processing, computer vision, or recommendation systems, they fine-tune their training methodologies to ensure optimal performance.

Ethical Data Annotation: AIT Protocol is deeply committed to ethical data annotation practices. They prioritize privacy and security, ensuring that all data annotation complies with the highest ethical standards and data protection regulations.

Innovation and Research: The team is dedicated to staying at the forefront of AI research by continually exploring new techniques, technologies, and methodologies to enhance the accuracy and efficiency of data annotation and AI model training.

Collaboration and Knowledge Sharing: AIT Protocol understands the collaborative nature of AI development. They actively engage with their clients, partners, and the wider AI community to share knowledge and insights.

Accessibility and Affordability: They are committed to making AI technology accessible to a wide range of businesses and researchers. AIT Protocol offers free-market pricing models and scalable solutions to ensure that organizations of all sizes can benefit from its expertise.

In summary, AIT Protocol's vision is to lead the AI revolution, its mission is to empower businesses and researchers, and its focus is on providing top-tier data annotation services and AI model training while upholding ethical standards and promoting innovation.

Ecosystem & Partnerships

AIT Protocol has partnered up with lots of solid projects in the space. Honorable mentions are LayerZero, OKX Chain, zkSync, Monad, Shardeum, Coin98, PaalAI, MetaBros, and General TAO Ventures.

General TAO Ventures is a super exciting partnership, so let’s talk a little bit more about that one.

Partnership of AIT Protocol and General TAO Ventures: A New Era for the Bittensor Network

This collaboration has led to the creation of one of the first 32 subnets in the Bittensor ecosystem, and the very first subnet developed by a native web3 company, a testament to the innovative spirit and forward-thinking approach of both companies.

GTV is committed to pushing the boundaries of incentivized, distributed machine learning. Their product-centric approach focuses on platforms that not only maximize participation within the Bittensor network but also empower end-users to contribute meaningful value.

Einstein-AIT (Subnet 3): A Convergence of Capabilities

The partnership between GTV and AIT has given birth to a subnet that is poised to redefine the capabilities of the Bittensor network. This subnet is designed to optimize response accuracy by enabling a language model to autonomously write, test, and execute code within unique Python environments. The result is a platform that not only provides precise and practical responses but also significantly enhances the accuracy and quality of responses network-wide.
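If "a model writes and runs its own code" sounds abstract, here is a minimal sketch of the general idea: take a snippet (here a hard-coded stand-in for model output), run it in a separate Python process, and use the printed result as the answer. The helper name `run_generated_code`, the timeout, and the example question are my own assumptions for illustration. This is not the subnet's actual implementation, and a plain subprocess is not a real security sandbox.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_generated_code(code: str, timeout_s: float = 5.0) -> dict:
    """Run a snippet in a separate Python process and capture its output.

    Hypothetical helper for illustration only; not the subnet's API.
    """
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "snippet.py"
        script.write_text(code, encoding="utf-8")
        try:
            proc = subprocess.run(
                [sys.executable, "-I", str(script)],  # -I: isolated mode
                capture_output=True,
                text=True,
                timeout=timeout_s,
                cwd=workdir,
            )
        except subprocess.TimeoutExpired:
            return {"ok": False, "stdout": "", "stderr": "timed out"}
    return {
        "ok": proc.returncode == 0,
        "stdout": proc.stdout.strip(),
        "stderr": proc.stderr.strip(),
    }


# Stand-in for code a language model might write for "What is 17 * 23 + 4?"
candidate = "print(17 * 23 + 4)"
result = run_generated_code(candidate)
answer = result["stdout"] if result["ok"] else None
print(answer)
```

The point of the pattern is that the arithmetic is done by the interpreter rather than guessed by the model, which is one plausible way to read "optimize response accuracy".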

The AIT-GTV subnet (SN3) is a robust and reliable domain dedicated to complex mathematical operations and logical reasoning. It will empower startups, enterprises and even other Bittensor subnets by offering advanced mathematical computation through its own proprietary models, as well as user-friendly APIs.

The partnership’s vision of creating a permissionless and decentralized service aligns perfectly with Bittensor’s core values, fostering an environment where innovation is powered by the collective strength and diversity of its participants.

Real-World Impact and Future Growth

The real-world applications of this partnership are vast and varied. From scientific research to education, programming, and even law, the subnet’s capabilities can be leveraged to drive progress and solve real-world problems. The ‘Train-to-Earn’ model also ensures that as users contribute to the AI’s enhancement, they are rewarded, creating a virtuous cycle of growth and development.

Looking ahead, the roadmap for the GTV and AIT partnership includes a series of strategic phases designed to maximize the potential of the subnet. From deploying a Supplementary Incentive Model (SIM) for miners, validators, and even end-users (another ‘first’ on the Bittensor network), to fostering a competitive environment and developing community-driven applications, the future is bright for this collaborative venture.

Through their partnership, they’ve launched one of the first 32 subnets in the Bittensor ecosystem. They are developing a subnetwork that will improve response accuracy for LLMs and maximize rewards for miners on Bittensor.

They're aiming to help the Bittensor network achieve the Gold Standard for LLMs: The Einstein-AIT subnet will act as a supercharger for other LLMs on TAO.

In other words, AIT is entering the subnet horse race: $TAO growth = $AIT growth

AIT is tapping into a network of networks that will provide data annotation jobs for its global workforce.

AIT will earn $TAO from subnet operations which will be re-invested back into $AIT growth and community through:

- $AIT token buybacks

- Platform development

- Growth of the $AIT token holder base

- User incentives

All right, anon.

We have covered a lot of background info about AIT Protocol, but maybe you have asked yourself the question: what are the problems they’re actually solving?

Let’s start by looking into the problems, and then in the next section see how AIT is solving them.

Problems & Challenges

Data processing is the linchpin of high-quality AI applications, and the accuracy of this data annotation process is crucial. However, the traditional methods employed in data annotation are now struggling to meet the demands of the ever-expanding AI landscape.

Two primary challenges confront this conventional paradigm: inefficiency and high costs.

Inefficiency

The conventional approach to data annotation mirrors the construction of a pyramid, heavily dependent on manual labor. This labor-intensive method, while once the standard, now proves inadequate in the face of the swift evolution of AI technologies. The inefficiencies inherent in this outdated process act as bottlenecks, creating a ripple effect that not only consumes valuable time but also hinders the seamless development of AI applications.

Adding to these challenges is the lack of web3-knowledgeable labelers, further exacerbating the limitations of traditional annotation methods. As the AI landscape continues to advance, the absence of expertise in the unique intricacies of web3 compounds the delays and constraints faced by projects.

High cost

Several factors contribute to the high costs associated with traditional data annotation methods. Firstly, acquiring skilled annotators is challenging and costly.

The availability of labor pools with the requisite expertise can be limited, leading to competitive labor markets and escalating wages. Onboarding new workers also consumes resources and increases costs. Moreover, the conventional payment terms add to the expenses. These high costs can be categorized as follows:

Extra Cost for Incorrect Labels: Mistakes made during the annotation process can lead to costly revisions, as data accuracy is paramount for AI applications. Correcting errors adds to the expense and extends project timelines.

Expensive Manual Data Collection: Data collection often necessitates hiring human labor, which can be a costly and time-consuming endeavor, especially for large-scale datasets.

Human/Labor-Intensive Labeling: Relying solely on human annotators results in labor-intensive processes that are prone to bottlenecks and inefficiencies, making it challenging to keep up with the pace of AI development.

AIT Protocol’s solution to the problem

AIT’s solutions represent a paradigm shift in data annotation, addressing the inefficiency and high costs that have plagued traditional methods.

By harnessing the power of HITL (more on that in the next section), a web3 global workforce, streamlined onboarding, and a permissionless marketplace, AIT is paving the way for a future of intelligent data processing that is not only more cost-effective but also more efficient and accessible to a global audience.

Human-In-The-Loop (HITL)

Their Human-In-The-Loop (HITL) approach stands as a harmonious blend of human expertise and cutting-edge machine-learning capabilities.

By synergizing human intuition with the efficiency of artificial intelligence, they not only diminish the dependence on human labor but also substantially enhance the overall efficiency of the labeling process. This strategic collaboration empowers companies to seamlessly meet the escalating demands of AI development.
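To make HITL a bit more concrete, here is a minimal sketch of one common way to do it: the model labels everything, and only the items it is unsure about go to human labelers. The `Prediction` class, the `route` function, and the 0.9 threshold are assumptions I made up for illustration; AIT's actual routing logic is not described in their material.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for its label, 0.0 to 1.0


def route(predictions, threshold=0.9):
    """Split predictions into auto-accepted labels and a human review queue.

    The threshold is a hypothetical tuning knob, not an AIT parameter.
    """
    auto_accepted, needs_human = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_human.append(p)
    return auto_accepted, needs_human


preds = [
    Prediction("img-001", "cat", 0.98),
    Prediction("img-002", "dog", 0.62),
    Prediction("img-003", "cat", 0.91),
]
auto_accepted, needs_human = route(preds)
print([p.item_id for p in auto_accepted])  # ['img-001', 'img-003']
print([p.item_id for p in needs_human])    # ['img-002']
```

As the model improves, more items clear the threshold and fewer need a human, which is the "diminish the dependence on human labor" part.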

Web3 Global Workforce

They're dismantling geographical and accessibility barriers, ushering in an era where anyone with an internet connection can seamlessly contribute to data annotation tasks. This transformative approach not only transcends traditional constraints but also grants companies access to an affordable, 24/7 global workforce.

By harnessing the combined power of the internet and blockchain technology, they forge a decentralized, borderless workforce capable of processing data efficiently and cost-effectively.

Streamlined Onboarding & Cross-Border Payments

Their commitment to user-friendly engagement begins with the implementation of robust Know Your Customer (KYC) processes, ensuring a secure and trustworthy platform. They've taken a stride further by simplifying onboarding procedures, making it remarkably easy for workers to seamlessly join their platform.

Permissionless Marketplace

The AIT platform empowers companies, projects, and individuals alike to craft their own data annotation tasks, paving the way for a dynamic marketplace where users are rewarded for contributing to the labeling of datasets.

This permissionless environment sparks healthy competition and fosters innovation, driving the creation of cost-effective solutions.
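For the builders among you, a marketplace task like this could be modeled roughly as follows. This is a sketch under my own assumptions: the `AnnotationTask` class, its fields, and the rule of splitting a reward pool in proportion to accepted labels are all hypothetical, since the actual reward mechanics are not spelled out in AIT's material.

```python
from dataclasses import dataclass, field


@dataclass
class AnnotationTask:
    task_id: str
    reward_pool: int  # total reward in smallest token units (hypothetical)
    accepted: dict = field(default_factory=dict)  # worker -> accepted labels

    def record(self, worker: str, n_accepted: int) -> None:
        """Credit a worker with labels that passed review."""
        self.accepted[worker] = self.accepted.get(worker, 0) + n_accepted

    def payouts(self) -> dict:
        """Split the pool pro rata; integer division may leave a small remainder."""
        total = sum(self.accepted.values())
        if total == 0:
            return {}
        return {w: self.reward_pool * n // total for w, n in self.accepted.items()}


task = AnnotationTask("sentiment-batch-1", reward_pool=1_000)
task.record("alice", 60)
task.record("bob", 40)
payouts = task.payouts()
print(payouts)  # {'alice': 600, 'bob': 400}
```

Paying only for accepted labels is one simple way to tie rewards to quality rather than volume.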

How do web3 data annotations work?

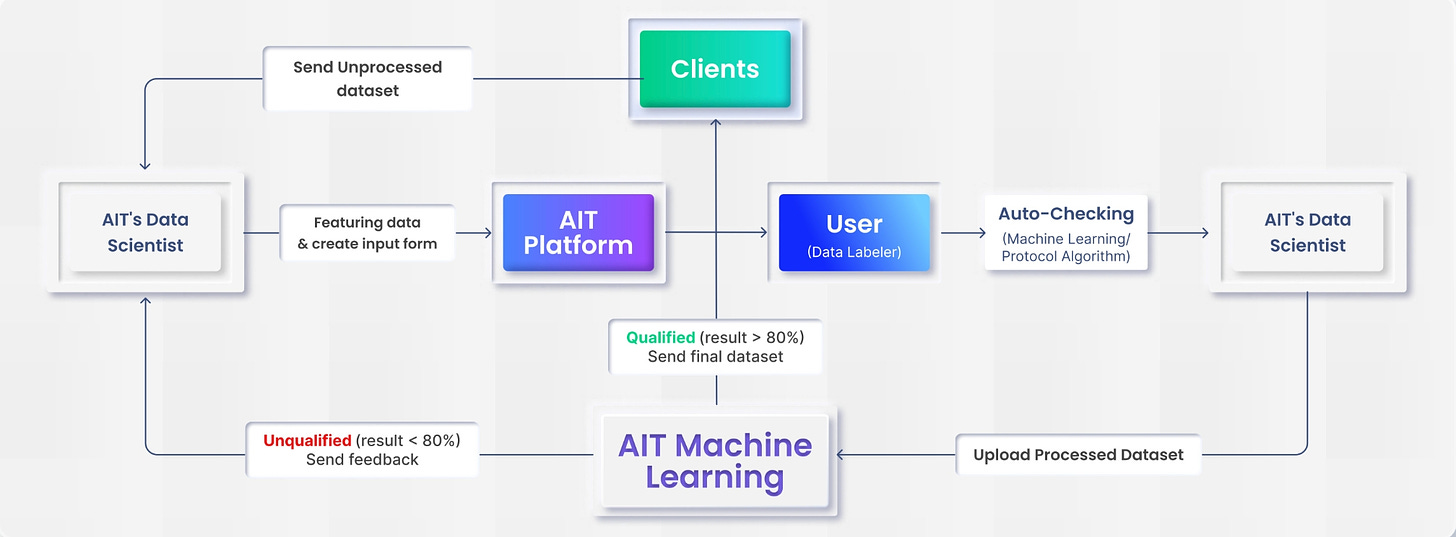

Take a look at the figure above to understand this. Here comes an explanation:

The journey begins with a team of expert data scientists, who pre-label the original datasets provided by clients (left on the figure).

These initial labels serve as the foundation, and are then refined by the user community and reinforced by state-of-the-art machine learning algorithms.

The final dataset undergoes a meticulous validation phase led by data scientists, ensuring the highest levels of accuracy and quality. This validation process serves as the hallmark of delivering reliable data.

This curated dataset is not just an endpoint; it's the beginning of empowering the customers. As you can see, the process is continuous: it is iterated again and again to make the product as good as possible.
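The steps above (pre-label, community refinement, expert validation) can be sketched in a few lines. Everything here is an assumption for illustration: the function names, the majority-vote rule, the 0.6 agreement threshold, and the toy transaction labels are mine, not AIT's documented method.

```python
from collections import Counter


def refine(pre_label, community_votes, min_agreement=0.6):
    """Return (label, agreement); keep the pre-label if consensus is weak."""
    if not community_votes:
        return pre_label, 0.0
    label, count = Counter(community_votes).most_common(1)[0]
    agreement = count / len(community_votes)
    if agreement >= min_agreement:
        return label, agreement
    return pre_label, agreement


def validate(refined, gold):
    """Accuracy of refined labels on a small expert-checked sample."""
    checked = [item for item in gold if item in refined]
    if not checked:
        return 0.0
    correct = sum(refined[item] == gold[item] for item in checked)
    return correct / len(checked)


# Step 1: data scientists pre-label the client's dataset (toy data).
pre_labels = {"tx-1": "swap", "tx-2": "transfer", "tx-3": "swap"}

# Step 2: the community votes on each item.
votes = {
    "tx-1": ["swap", "swap", "swap"],
    "tx-2": ["mint", "mint", "transfer", "mint"],
    "tx-3": ["swap", "bridge"],
}
refined = {i: refine(pre_labels[i], votes.get(i, []))[0] for i in pre_labels}

# Step 3: data scientists spot-check a sample before delivery.
accuracy = validate(refined, {"tx-1": "swap", "tx-2": "mint"})
print(refined)   # {'tx-1': 'swap', 'tx-2': 'mint', 'tx-3': 'swap'}
print(accuracy)  # 1.0
```

In this toy run the community overrides the pre-label for tx-2 (3 of 4 votes), while tx-3 keeps its pre-label because the vote is split.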

Dataset as a Service (DaaS)

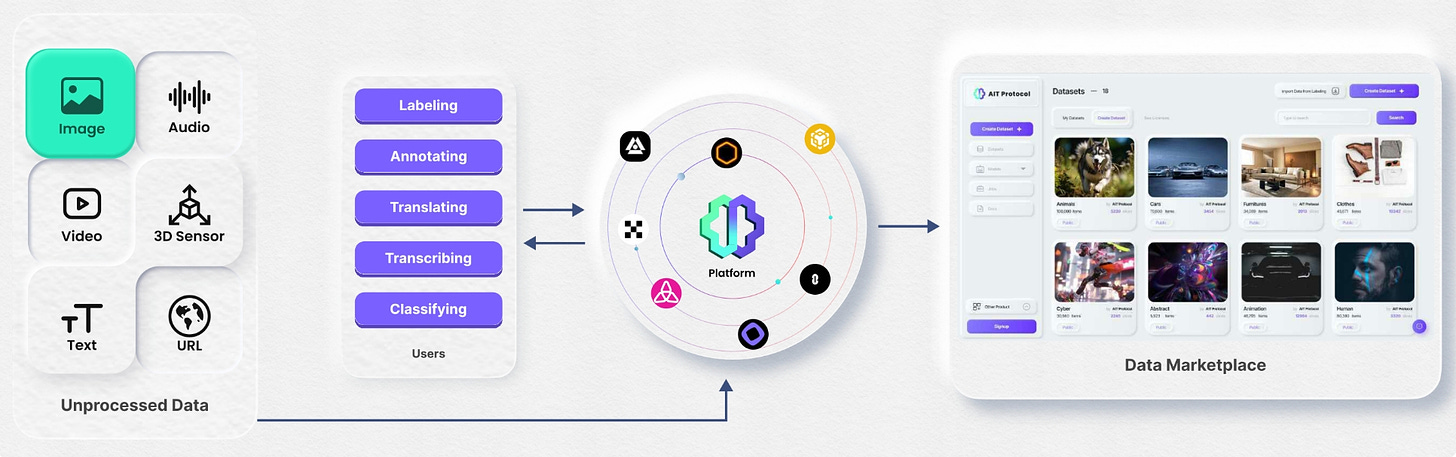

In the ever-evolving landscape of decentralized technologies, AIT Protocol stands at the forefront, introducing a revolutionary force: the AIT Protocol Marketplace (illustrated above).

Spanning a spectrum from blockchain analytics to decentralized application data, these datasets that the scientists upload undergo a meticulous processing phase, driven by the collective power of the AIT community.

This collaborative effort unlocks the true potential of each dataset, unraveling valuable insights and applications.

These datasets are presented to subscribers on the AIT Protocol Data Marketplace, a dynamic nexus attracting data enthusiasts, researchers, and businesses.

This marketplace transcends traditional data exchange paradigms, connecting data providers with those in need, and fostering a vibrant ecosystem where the true value of data is fully realized.

Custom AI Solutions

AIT Protocol perceives the creation of custom AI for company-specific needs as a strategic investment that can transform the business by harnessing the full potential of artificial intelligence.

Thanks to the utilization of web3 technology and the abundant crypto workforce, the expense associated with creating bespoke AI solutions has reached an unprecedented level of affordability and accessibility.

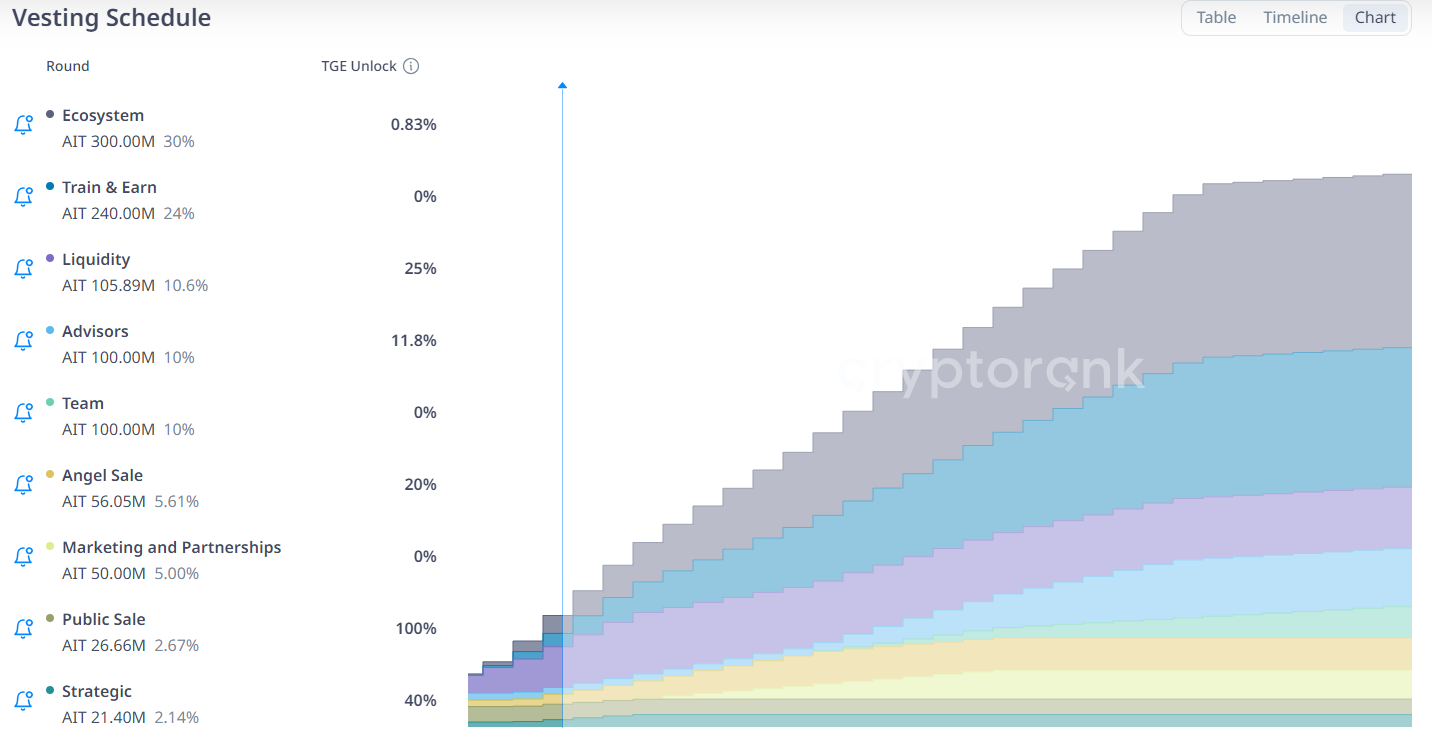

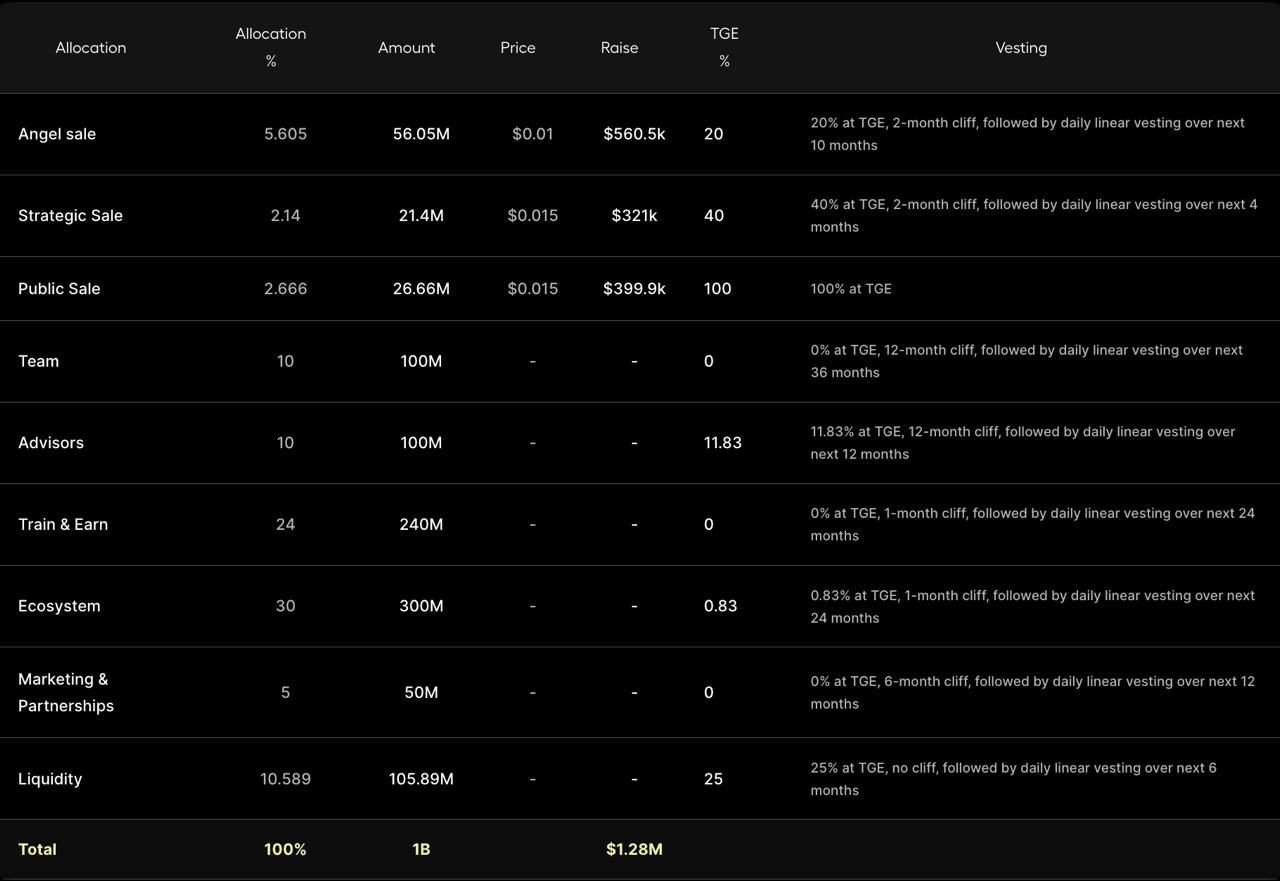

Token distribution and tokenomics

$AIT is the native and governance token of the AIT Protocol ecosystem, with a fixed supply of 1 billion tokens. It provides access to the AIT protocol features.

$AIT serves as the platform currency to pay for Marketplace’s subscription fees, data processing, AI rental, and Launchpad.

Here is the vesting schedule:

Roadmap

Q1 - 2024

Marketing Campaigns

AIT Moderation Bot - Product Live on Telegram (MaaS)

AIT Data Tracking Bot - Product Demo

Data Validation Platform Live

Web3 Clients Onboarding

Q2 - 2024

Dataset as a Service (DaaS)

AI Data Marketplace

AIT Data Tracking Bot - Product Live on Telegram

More Blockchain Integrations

Q3 - 2024

Web3 AI Solutions Providing (Company Specific)

You can read more details here: https://medium.com/@nnehan796/ait-protocols-journey-unveiled-91b94590a729

Conclusion

In recent years, the use of large datasets in the development of artificial intelligence (AI) has been widely acknowledged. In fact, it has been argued that AI could not function without data.

The market size for Big Data Analytics was anticipated to reach $271.83 billion by 2022, which is a significant figure. However, it is a playing field for big tech giants such as Microsoft, IBM, and Amazon. When developing and launching their products, small AI start-ups face formidable obstacles.

The struggle to manage massive data sets is real. It is costly, time-consuming, and requires highly skilled personnel, including highly compensated data scientists.

This is where AIT Protocol comes in.

In conclusion, the AIT Protocol provides a next-generation big data analytics platform that enables small start-ups to surmount the formidable obstacles they encounter during product development and launch. Its inventive approach combines the power of machine learning with the expertise of actual humans, and has the potential to revolutionize the processing and analysis of large data.

And, PS! As a last thing here, let’s just take one second to study the price chart here.

The price is up over 800% in the last 3 months.

As a trend trader myself I like tokens that are showing strength, and if you are bullish on AI as a narrative (I certainly am), then this token which sits at a 60m mcap is probably quite undervalued if you think in a long-term perspective.
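If you want to sanity-check numbers like these yourself, the arithmetic is simple. This is just a sketch: it takes the 800% gain, the 60m mcap, and the 10x scenario from this newsletter at face value, and it assumes circulating supply stays constant, which is usually not true for tokens with vesting.

```python
def gain_to_multiple(pct_gain: float) -> float:
    """Convert a percentage gain into a price multiple (e.g. +100% -> 2x)."""
    return 1 + pct_gain / 100


multiple = gain_to_multiple(800)  # +800% means the price is 9x, not 8x
mcap_now = 60_000_000             # figure quoted above

# Assumption: circulating supply unchanged over the period.
mcap_three_months_ago = mcap_now / multiple
mcap_at_10x = mcap_now * 10

print(multiple)                      # 9.0
print(round(mcap_three_months_ago))  # 6666667
print(mcap_at_10x)                   # 600000000
```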

NFA ofc.

All right, I guess that’s it for today.

See you around, anon!

Stay bullish.
